Cluster Option

The Cluster Option allows users to speed up the solving process by launching multiple connected FLAC3D instances in cluster computing environments.

Cluster Computing in FLAC3D

Introduction

Cluster computing is a type of computing in which multiple computers are connected as a cluster and operate together as a single system. Software on each node (a computer acting as a server in the cluster) performs the same task simultaneously, and parallel computing techniques coordinate the use of many processors. This provides more computational power and completes demanding workloads faster.

The Cluster option runs FLAC3D in cluster computing mode using a leader-follower architecture (Figure 1). Each node in the cluster network runs a FLAC3D instance, and all the FLAC3D instances are connected through the Message Passing Interface (MPI) for data communication and management.

Figure 1: FLAC3D cluster computing architecture.

The leader is the head node from which all MPI and FLAC3D commands are initiated. The FLAC3D instance on the leader node works in active mode and manages all general FLAC3D data processing, including:

Save and restore the model status

Create and modify the topology: zones, gridpoints, attaches, zone joints, etc.

Configure physical properties

Initiate FISH calls

It also handles many MPI-specific functionalities, including:

Use a domain partitioning algorithm to split the model for parallel computing

Distribute model data to followers

Gather model data from followers

Manage global data exchange between cluster nodes

The followers are the non-leader nodes in the cluster environment, each running a FLAC3D instance. The FLAC3D instances on follower nodes do not accept user input. They stay in passive mode while not cycling and are activated when the leader distributes data to them.

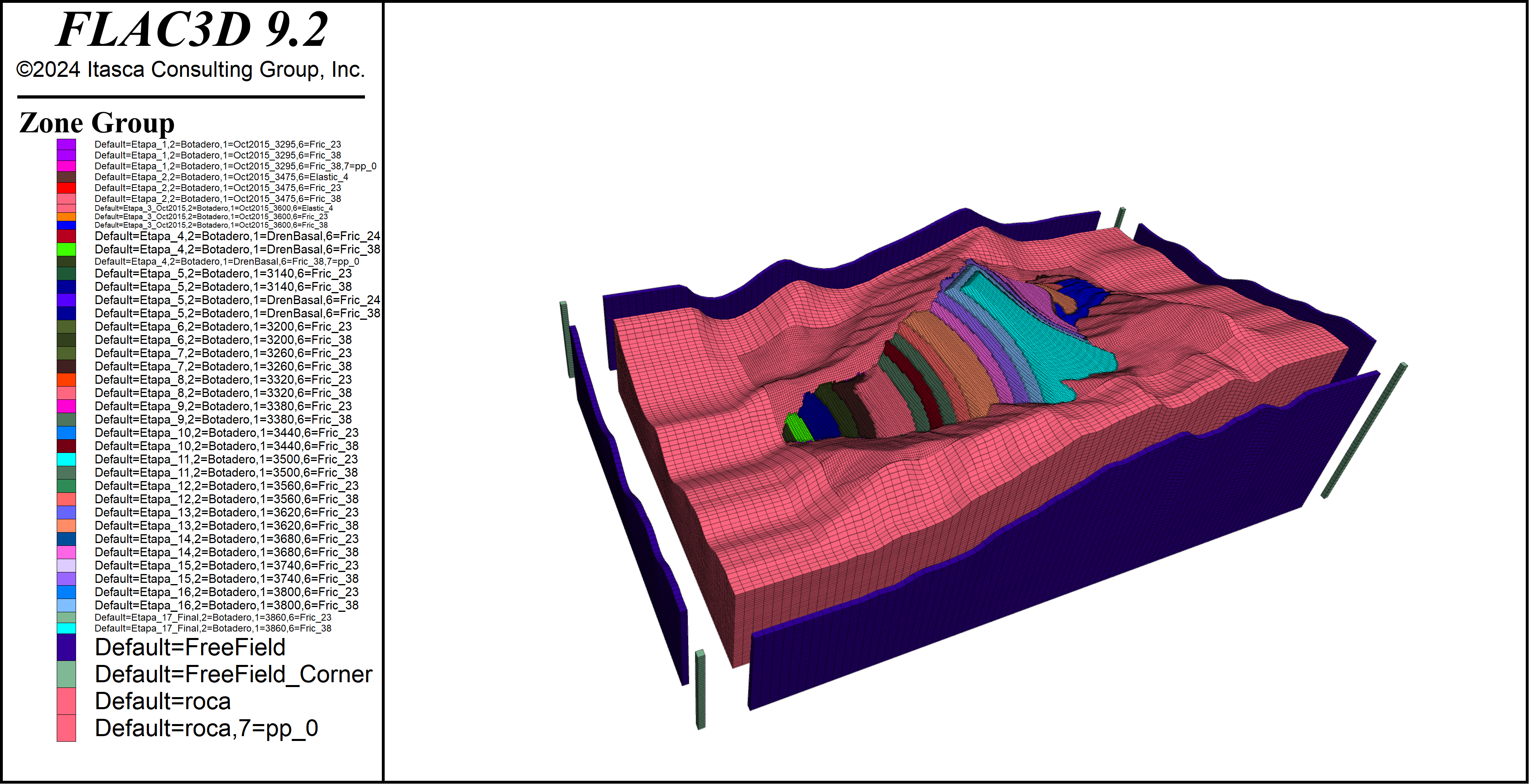

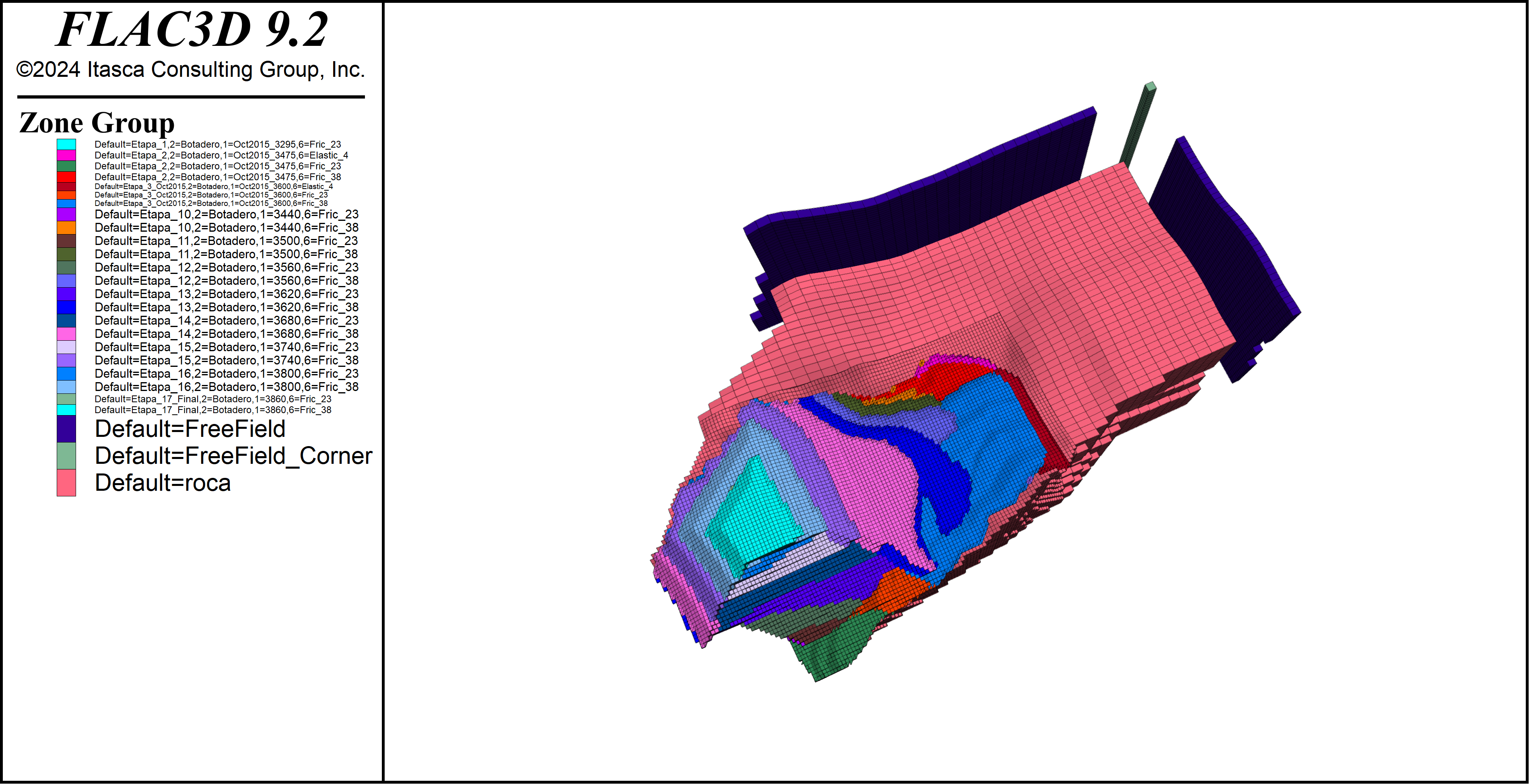

After domain partitioning and model distribution, every node in the cluster environment (leader and followers) holds one partial of the original model (Figure 2). Cycling is then performed on all nodes, and data is synchronized between each node and every other node during cycling.

Figure 2: Original model (above) and model partial on a node after domain partitioning (below).
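The domain partition step can be illustrated with a generic technique. The sketch below uses recursive coordinate bisection (RCB) on zone centroids to split a model into one part per node, cutting each time along the axis with the largest extent. This is an assumption for illustration only: the documentation does not say which partitioning algorithm FLAC3D uses, and the function name and input format are hypothetical.

```python
def recursive_coordinate_bisection(centroids, n_parts):
    """Split zones into n_parts groups of near-equal size (generic RCB sketch).

    centroids: list of (x, y, z) tuples, one per zone (hypothetical input format).
    Returns a list of n_parts lists of zone indices.
    """
    if not 1 <= n_parts <= len(centroids):
        raise ValueError("need 1 <= n_parts <= number of zones")

    def split(indices, parts):
        if parts == 1:
            return [indices]
        # Cut along the axis with the largest spatial extent.
        extents = [
            max(centroids[i][a] for i in indices) - min(centroids[i][a] for i in indices)
            for a in range(3)
        ]
        axis = extents.index(max(extents))
        ordered = sorted(indices, key=lambda i: centroids[i][axis])
        # Give each side a share of zones proportional to the parts it will hold.
        left_parts = parts // 2
        cut = len(ordered) * left_parts // parts
        return split(ordered[:cut], left_parts) + split(ordered[cut:], parts - left_parts)

    return split(list(range(len(centroids))), n_parts)


# Example: a row of 8 unit zones along x, split across 4 nodes.
zones = [(x + 0.5, 0.5, 0.5) for x in range(8)]
print(recursive_coordinate_bisection(zones, 4))
```

Each part here is spatially compact, which keeps the shared boundary (and therefore the data exchanged between nodes during cycling) small; that is the usual reason for choosing a geometric partitioner.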

Utilizing the above architecture, significant speedup can be achieved for the solving process. Figure 3 shows the results of a strong scalability test to solve a specific mechanical model with 8 million zones on multiple nodes using the cluster option. The model performs about 20 times faster on 32 nodes and scales from 4 to 32 nodes following a mostly linear pattern.

Figure 3: Strong scalability speedup. Test performed on Amazon Web Services (AWS), node type m5a.4xlarge with 16 CPU cores and 64 GB RAM per node.
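Strong-scaling results such as Figure 3 are commonly summarized as speedup (baseline run time divided by run time on n nodes) and parallel efficiency (speedup divided by the increase in node count). The sketch below computes both. The run times in the example are hypothetical, and it assumes the roughly 20x speedup at 32 nodes is measured against a single node, which the text does not state; under that assumption the efficiency would be about 20/32, or 0.63.

```python
def strong_scaling(t_base, t_n, n_base, n):
    """Speedup and parallel efficiency for a fixed-size (strong scaling) problem.

    t_base: run time on n_base nodes; t_n: run time on n nodes.
    """
    speedup = t_base / t_n
    efficiency = speedup / (n / n_base)  # 1.0 means perfectly linear scaling
    return speedup, efficiency


# Hypothetical timings: 2000 s on 1 node, 100 s on 32 nodes.
print(strong_scaling(2000.0, 100.0, 1, 32))
```

Efficiency close to 1.0 over a range of node counts corresponds to the "mostly linear" behaviour described for 4 to 32 nodes.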

The cluster option in FLAC3D does not affect the results. FLAC3D generates results identical to normal runs with the same level of accuracy and determinism. The save files are also cross-compatible between normal FLAC3D and the cluster option.

Supported FLAC3D Features

Not all FLAC3D features are supported for cluster computing yet. Below is a list of currently supported features; Itasca plans to add more features in future updates.

Features supported:

Continuous mechanical analysis with both small and large strain configurations.

Discontinuous mechanical analysis with zone joints (note that zone joints currently work only in small strain configuration).

Open pit excavation with apply relax conditions and back-fill.

Dynamic analysis, including Rayleigh/Maxwell damping settings and quiet/free-field boundary conditions.

Fluid undrained pore-pressure response (requires both model configure fluid-flow and model fluid active off).

General FISH callback functionalities. Note: nulling or deleting model topology during cycling is not yet supported.

All Itasca constitutive models.

Factor-of-safety analysis.

General FLAC3D functionalities: create/modify models, save/restore, pre-processing and post-processing (history, plot, etc.).

Local model partial visualization: the local model partials on every node can be visualized and plotted during cycling (GUI version of FLAC3D needed).

Result determinism.

Features planned as future updates:

Structure elements.

Thermal analysis.

Fluid analysis with active fluid flow.

Using the Cluster Option in FLAC3D

To use FLAC3D in cluster computing environments, ensure both that the FLAC3D instances are launched correctly and that the model is configured to activate the Cluster option.

Launching FLAC3D in Cluster Mode

There are three things to check before launching FLAC3D in a cluster environment:

A compatible MPI implementation has been installed on all the nodes.

The same version of FLAC3D has been installed on all the nodes.

SSH connections between the nodes are available.

Note: not all MPI implementations are supported. See the Frequently Asked Questions for the complete list of supported implementations.

To use the cluster option, launch the FLAC3D instances with the MPI job launcher mpirun/mpiexec (or a job scheduler compatible with mpirun) and add the extra command-line argument mpi. The typical command to launch multiple FLAC3D console instances in a cluster environment with OpenMPI is:

$ mpirun -n <number-of-processes> --host <list-of-cluster-nodes> flac3d9_console mpi "datafile"

It is also possible to launch the GUI version of FLAC3D on the leader node (for visualization, etc.) while using the console version on the followers:

$ mpirun -n 1 --host <leader> flac3d9_gui mpi "datafile" : -n <number-of-processes-1> --host <list-of-followers> flac3d9_console mpi
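The two launch commands above can also be assembled programmatically, for example in a job script. The sketch below is a hypothetical Python helper that only builds the OpenMPI argument lists shown above, with one instance per host and OpenMPI's comma-separated `--host` list; it does not launch anything. Host names and the datafile name are placeholders.

```python
import shlex


def console_command(hosts, datafile, exe="flac3d9_console"):
    """One console instance per host, as in the first mpirun example."""
    return ["mpirun", "-n", str(len(hosts)), "--host", ",".join(hosts),
            exe, "mpi", datafile]


def gui_leader_command(leader, followers, datafile):
    """GUI instance on the leader, console instances on the followers.

    The ':' separates the two application contexts, as in the second example;
    only the leader receives the datafile.
    """
    leader_part = ["mpirun", "-n", "1", "--host", leader,
                   "flac3d9_gui", "mpi", datafile, ":"]
    follower_part = ["-n", str(len(followers)), "--host", ",".join(followers),
                     "flac3d9_console", "mpi"]
    return leader_part + follower_part


# Placeholder host names and datafile.
print(shlex.join(console_command(["node01", "node02"], "model.dat")))
print(shlex.join(gui_leader_command("node01", ["node02", "node03", "node04"], "model.dat")))
```

Keeping the command as a list (rather than one string) avoids shell quoting problems; the list could be passed directly to `subprocess.run` on the leader node.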

The cluster option can be used in cloud computing environments in the same way as local clusters. Itasca has been cooperating with Rescale to provide a pre-configured easy-to-use cloud-based solution. See here for a tutorial on using Rescale. Users can also create their own solutions using any cloud provider like Amazon Web Services (AWS) or Microsoft Azure.

Configuring the Model for Cluster Mode

To run the model in a cluster environment, configure it by adding the command model configure cluster to the project datafile. This command activates the FLAC3D instances for cluster mode and initiates the model compatibility checks. An example is shown below.

Note that model configure cluster can be given even if a run is not being done under MPI to allow checking a model for MPI compatibility ahead of time.

model new

model configure cluster ; necessary configuration for cluster mode

;model configure thermal ; incompatible with cluster mode

zone create brick size 100 100 100

zone cmodel assign mohr-coulomb

zone property bulk 3e8 shear 2e8 coh 1e6 fric 15 dens 1000

zone face skin

zone face apply velocity-normal 0 range group 'West'

zone face apply velocity-normal 0 range group 'South'

zone face apply velocity-normal 0 range group 'Bottom'

model gravity 10

model cycle 100

exit

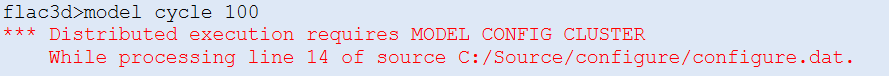

If the FLAC3D instances have been launched correctly in cluster mode but the model is not configured, users receive an error message when trying to cycle (Figure 4).

Figure 4: Error message for cluster mode configuration.

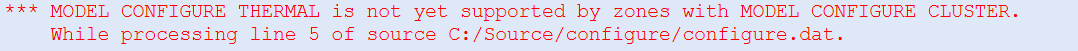

If unsupported FLAC3D features are used within cluster mode, users will receive an error message during command execution (Figure 5).

Figure 5: Error message for unsupported feature in cluster mode.

No further datafile edits are needed for cluster mode, provided the model does not use features that cluster mode does not yet support.

Cluster Option Licenses

Itasca provides three tiers of cluster option licenses, based on the number of FLAC3D instances used:

Cluster-Basic: up to 8 instances.

Cluster-Advanced: up to 16 instances.

Cluster-Professional: up to 32 instances.

To run more than 32 FLAC3D instances simultaneously, contact Itasca for further assistance.
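The tier limits above map directly to a lookup by instance count. The sketch below is a hypothetical helper (not part of FLAC3D or Itasca licensing tools) that returns the smallest tier covering a given number of instances, assuming each tier allows any count up to its stated maximum, and returns None above 32 instances, where the text says to contact Itasca.

```python
# (maximum instances, tier name), taken from the list above.
TIERS = [(8, "Cluster-Basic"), (16, "Cluster-Advanced"), (32, "Cluster-Professional")]


def license_tier(n_instances):
    """Smallest cluster tier that covers n_instances, or None if above 32."""
    if n_instances < 1:
        raise ValueError("need at least one instance")
    for limit, name in TIERS:
        if n_instances <= limit:
            return name
    return None  # more than 32 instances: contact Itasca


print(license_tier(4))
```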

Cluster Option Examples

Open Pit Excavation in Cluster Mode

This example takes the base model from Open Pit Mine with Faults (FLAC3D), restores the initial save file, and runs the excavation stage in cluster mode with four nodes. The results are then compared with those of the original (non-cluster) run. The only change needed in the excavate-cluster datafile is the addition of the model configure cluster command.

excavate-cluster.dat

model restore 'initial'

model configure cluster ; necessary for cluster mode

zone gridpoint initialize displacement 0 0 0

zone gridpoint initialize velocity 0 0 0

zone initialize state 0

; take history of point at the edge of the pit

zone history displacement position 2465,-400,-77

zone relax excavate step 500 range group 'pit'

model solve cycles 501 and ratio 1e-5

model save 'excavate-cluster'

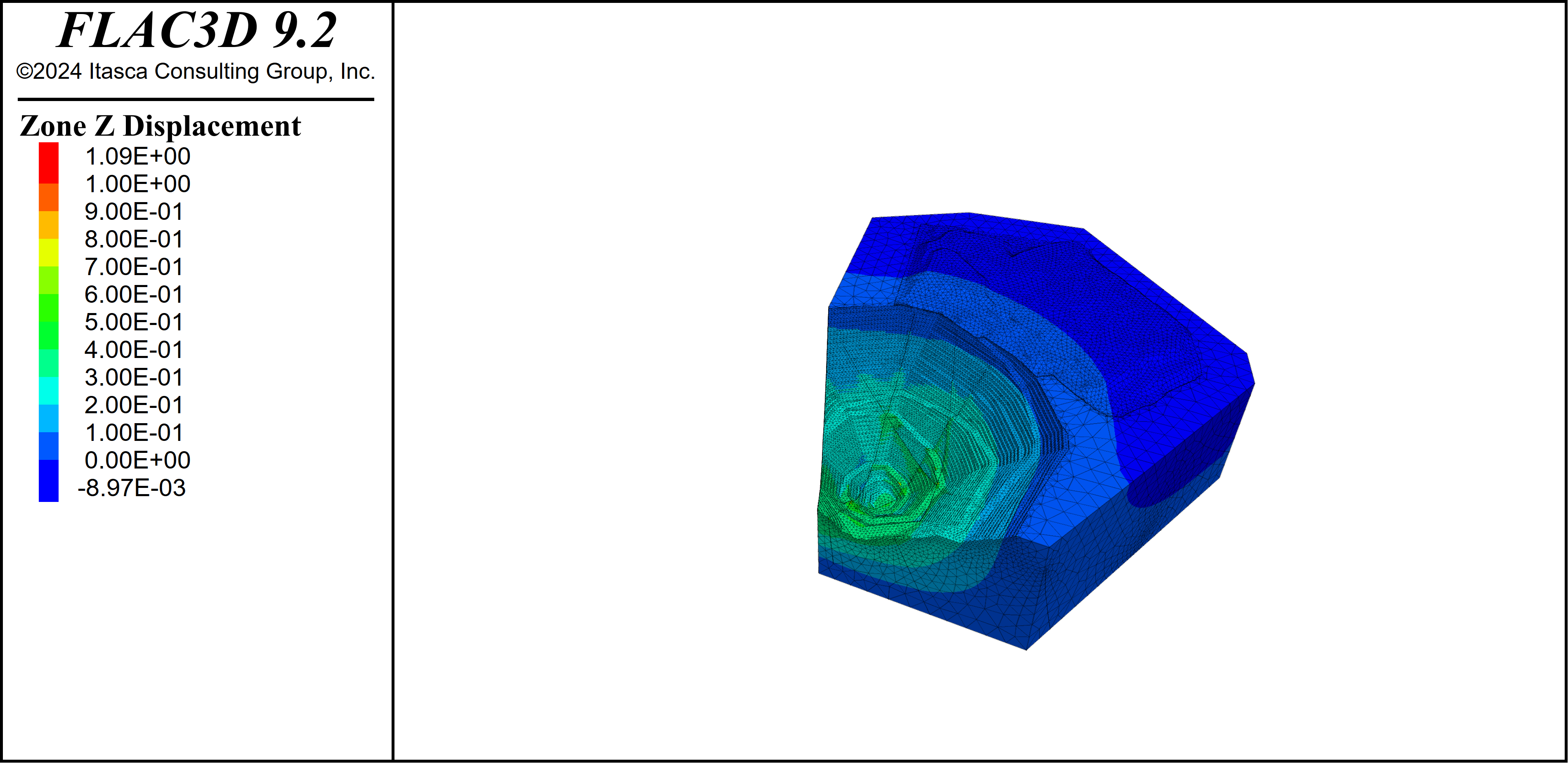

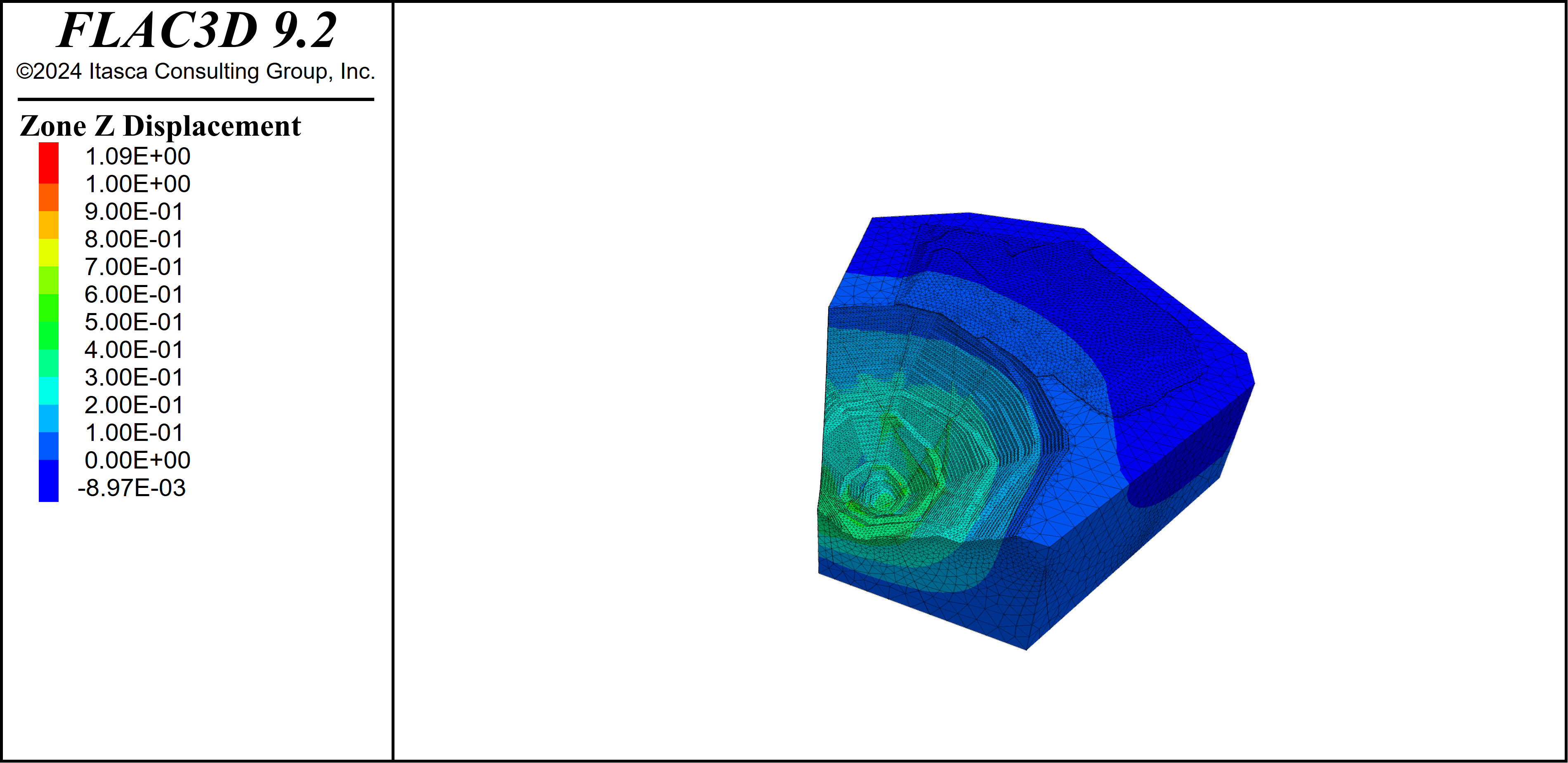

Figure 6 shows the vertical displacement for both the normal and the cluster run; the results coincide. A datafile to check result compatibility is provided below.

Figure 6: z-displacement for normal (above) and cluster (below) excavation.

model restore "excavate"

fish define get_disp

global _vd1 = gp.disp.z(gp.near(2465,-400,-77))

end

[get_disp]

model restore "excavate-cluster" SKIP FISH

fish define check_disp(value,tol)

global _vd2 = gp.disp.z(gp.near(2465,-400,-77))

global _err = math.abs(_vd1-_vd2)/math.abs(value)

if _err > tol then

system.error = 'Z-displacement test failed'

end_if

end

[check_disp(_vd1, 1e-6)]
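The FISH check above normalizes the absolute difference between the two z-displacements by the magnitude of a reference value and fails if the result exceeds the tolerance. The same logic is sketched below in Python for readers post-processing exported results outside FLAC3D; the function mirrors the FISH names, and the displacement values in the example are hypothetical. As in the FISH version, a reference value of zero would cause a division by zero.

```python
def check_disp(vd1, vd2, value, tol):
    """Relative difference |vd1 - vd2| / |value|; raise if it exceeds tol."""
    err = abs(vd1 - vd2) / abs(value)
    if err > tol:
        raise AssertionError("Z-displacement test failed")
    return err


# Hypothetical z-displacements (m) from a normal and a cluster run.
vd_normal = -0.0125
vd_cluster = -0.0125
print(check_disp(vd_normal, vd_cluster, vd_normal, 1e-6))
```

A tolerance of 1e-6 is deliberately tight: the documentation states that cluster and normal runs produce identical results, so any measurable difference points to a setup problem rather than numerical noise.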

Maxwell Damping Analysis in Cluster Mode

This example takes the base model from Site Response using Maxwell Damping, restores the static stage save file, and runs the Maxwell damping stage in cluster mode with four nodes. The only necessary change made to the siteResponseMaxwell-cluster datafile is the model configure cluster command.

model restore "static"

model configure cluster ; necessary for cluster mode

zone gridpoint initialize displacement (0,0,0)

zone gridpoint initialize velocity (0,0,0)

zone initialize state 0

;

model dynamic active on

;

; Histories

history delete

model history name='time' dynamic time-total

zone history name='a-acc-x' acceleration-x position 600 0 10

zone history name='a-dis-x' displacement-x position 600 0 10

zone history name='b-acc-x' acceleration-x position 440 0 10

zone history name='b-dis-x' displacement-x position 440 0 10

zone history name='c-acc-x' acceleration-x position 305 0 2.5

zone history name='c-dis-x' displacement-x position 305 0 2.5

zone history name='d-acc-x' acceleration-x position 170 0 -5

zone history name='d-dis-x' displacement-x position 170 0 -5

zone history name='e-acc-x' acceleration-x position 80 0 -5

zone history name='e-dis-x' displacement-x position 80 0 -5

;

;;; Target 5% Maxwell Damping

zone dynamic damping maxwell 0.0385 0.5 0.0335 3.5 0.052 25.0

;

zone face apply-remove

zone face apply quiet range group 'Bottom'

table 'acc' import "Coyote.acc"

[table.as.list('vel') = table.integrate('acc')]

[global mf = -den1*Vs1*g] ; with g since acceleration is in g.

zone face apply stress-xz [mf] table 'vel' time dynamic range group 'Bottom'

zone face apply stress-xy 0.0 range group 'Bottom'

zone face apply stress-zz 0.0 range group 'Bottom'

zone dynamic free-field plane-x

zone gridpoint fix velocity-z range group 'Bottom'

zone dynamic multi-step on

;

history interval 40

model dynamic timestep fix 5.0e-4 ; history output interval = 40 steps x 5.0e-4 s

model solve time-total 26.83

model save "dynamic-maxwell-cluster"

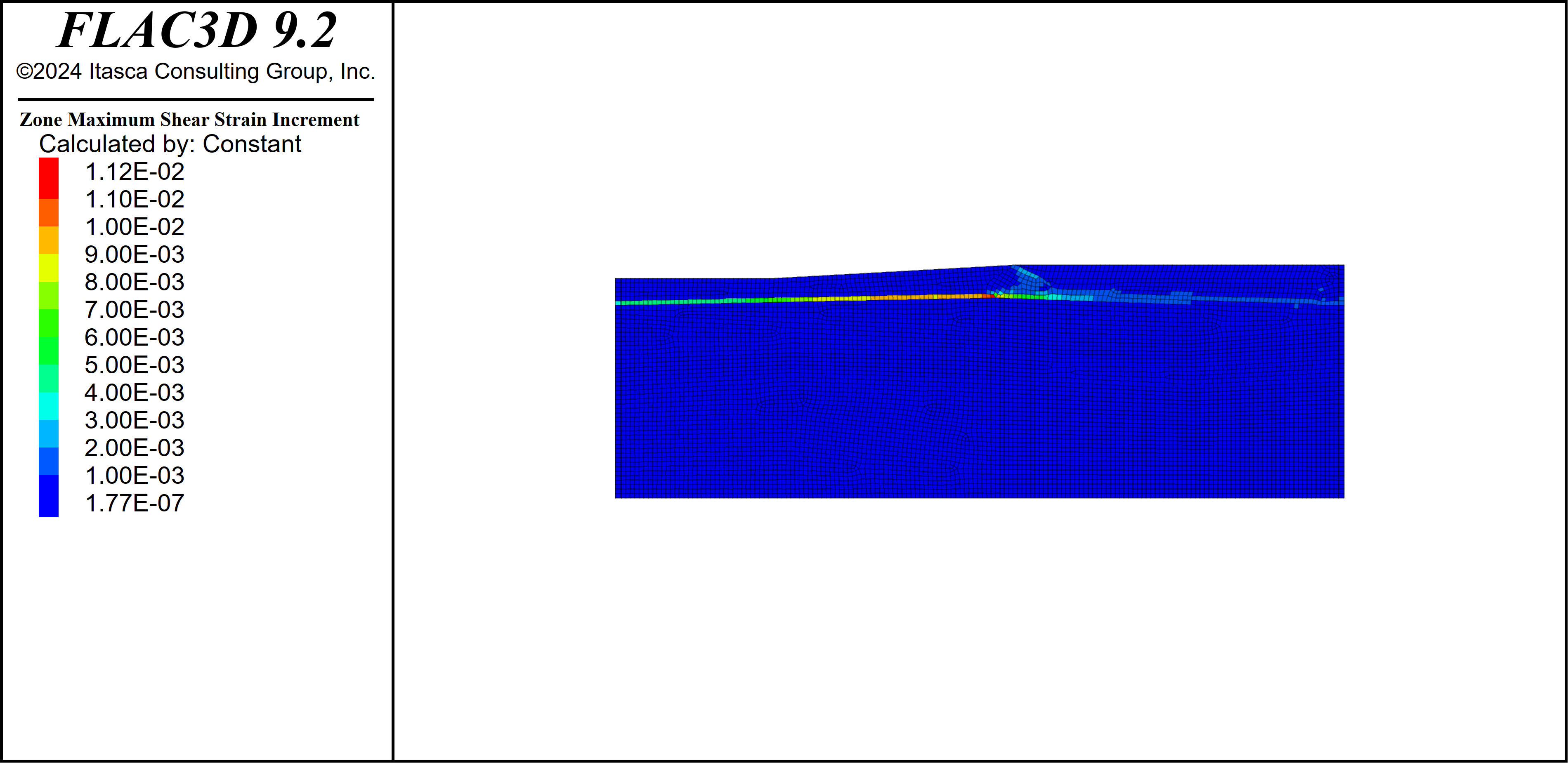

Figure 7: Max shear strain increment for cluster run.

Frequently Asked Questions

List of Supported MPI Implementations

The cluster option in FLAC3D relies on MPI for data communication. The Message Passing Interface (MPI) is a standardized and portable communication protocol used for programming parallel computers. It allows multiple processes to communicate with each other by sending and receiving messages, which is essential for cluster computing architectures.

Because MPI is only a protocol, users also need an implementation of it in binary form. Among the many MPI implementations available, the FLAC3D cluster option officially supports:

OpenMPI, version 4.1.2.

Microsoft MPI (MS-MPI), version 10.1.3.

Note: It is possible that the FLAC3D cluster option could also work with other MPI implementations and versions. The list above is for official support purposes.

Supported Operating Systems

The FLAC3D cluster option officially supports both Ubuntu Linux 22.04 LTS and Windows.

Because OpenMPI v4.1.2 and the SSH server are both available as system-level packages on Ubuntu Linux 22.04 LTS, no extra action is needed for deployment. Running FLAC3D cluster mode under Linux is generally recommended for ease of setup.

It is also possible to use the FLAC3D cluster option on Windows machines. This requires installing the MS-MPI and SSH server system components on all the nodes in a cluster environment. Note that the mpirun job launcher commands for MS-MPI can be different from OpenMPI commands.

The FLAC3D cluster option requires all the instances to be launched from the same operating system. It is not possible to connect a Windows FLAC3D instance with a Linux one.

Hardware Requirements for Cluster Option

There is no additional hardware requirement for the FLAC3D cluster option. However, it is up to the user to make sure that the nodes used for the cluster option have enough RAM to hold the model and cycle.

Unfortunately, Itasca cannot make accurate RAM usage predictions for every use case. However, some general rules apply:

FLAC3D cluster mode runs under a leader-follower architecture. Since model processing and domain partitioning are both managed on the leader node, the leader node needs enough RAM to hold the whole model, plus extra RAM for domain partitioning and for distributing and gathering data.

Each follower node holds only one partial of the original model, so followers do not need as much RAM as the leader node.

Cluster mode also benefits from faster inter-node connections, which reduce communication overhead and improve performance.
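The RAM rules above can be turned into a rough planning estimate. The sketch below is hypothetical and not an Itasca sizing method: it assumes the user has measured the RAM a normal (non-cluster) run of the model needs, treats the leader's extra partitioning and distribution memory as a user-chosen fraction, and assumes each follower holds an equal share of the model. The overhead fractions are assumptions to be tuned against observed usage, not published figures.

```python
def estimate_ram_gb(full_model_gb, n_nodes, leader_overhead, follower_overhead=0.0):
    """Rough per-node RAM estimate following the leader-follower rules.

    full_model_gb: RAM measured for a normal single-instance run of the model.
    leader_overhead: assumed extra fraction for partitioning and data
        distribution/gathering on the leader (hypothetical, user-chosen).
    follower_overhead: assumed extra fraction per follower (hypothetical).
    """
    leader = full_model_gb * (1.0 + leader_overhead)  # whole model plus extra
    follower = full_model_gb / n_nodes * (1.0 + follower_overhead)  # one partial
    return leader, follower


# Hypothetical: a 10 GB model on 4 nodes with an assumed 50% leader overhead.
print(estimate_ram_gb(10.0, 4, leader_overhead=0.5))
```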

How to Prepare the Model for Cluster Mode

While cluster mode and a normal FLAC3D run generate identical results, one notable difference is that cluster mode places a limitation on model input: the input zone model must be topologically contiguous:

No gap between model elements (zones and gridpoints).

No overlapping model elements.

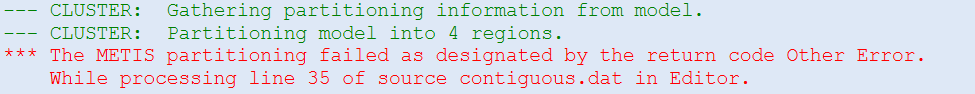

It is possible to cycle a model with a non-contiguous mesh in a normal FLAC3D run, but cycling it in cluster mode produces an error during domain partitioning (Figure 8).

Figure 8: Non-contiguous mesh error.
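The "no gap" condition can be checked before a cluster run by treating zones as graph nodes and searching for disconnected pieces. The sketch below is a hypothetical pre-check, not FLAC3D's own test: zones count as connected when they share a gridpoint, and because attached or jointed faces do not share gridpoints, such connections must be supplied explicitly through the assumed `extra_links` parameter. It does not detect overlapping zones.

```python
from collections import defaultdict, deque


def is_contiguous(zones, extra_links=()):
    """Return True if every zone can be reached from zone 0.

    zones: list of gridpoint-id tuples, one per zone (hypothetical mesh format).
    extra_links: (zone_a, zone_b) pairs for connections that share no
        gridpoints, e.g. attached or jointed faces (an assumption).
    """
    if not zones:
        return True
    # Map each gridpoint to the zones that use it.
    gp_owner = defaultdict(list)
    for z, gps in enumerate(zones):
        for gp in gps:
            gp_owner[gp].append(z)
    # Zones sharing a gridpoint are neighbours.
    neighbours = defaultdict(set)
    for owners in gp_owner.values():
        for a in owners:
            neighbours[a].update(owners)
    for a, b in extra_links:
        neighbours[a].add(b)
        neighbours[b].add(a)
    # Breadth-first search from zone 0.
    seen, queue = {0}, deque([0])
    while queue:
        for nb in neighbours[queue.popleft()]:
            if nb not in seen:
                seen.add(nb)
                queue.append(nb)
    return len(seen) == len(zones)


brick_a = tuple(range(8))       # gridpoints 0-7
brick_b = tuple(range(4, 12))   # shares a face (gridpoints 4-7) with brick_a
brick_c = tuple(range(8, 16))   # shares nothing with brick_a
print(is_contiguous([brick_a, brick_b]), is_contiguous([brick_a, brick_c]))
```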

Use the Cluster Option Effectively

While FLAC3D cluster mode has been optimized for performance, the actual speedup depends on many factors, and inefficient use of cluster mode can slow down the solving process significantly. The following points can help users get better performance from cluster mode.

Create a quality mesh: although non-conformal meshes and poor-quality zones can work in cluster mode, they usually introduce more model elements (attach conditions, joints, etc.), which increase memory usage and slow down cycling.

Avoid unnecessary cycle commands: although there are use cases for cycling in small steps as a validation check, every cycle command triggers a complete round of data distribution and gathering in cluster mode, which adds overhead and slows down performance.

Launch FLAC3D instances in cluster mode wisely: all the examples in this documentation use one FLAC3D instance per node. However, there is no actual limit on how many instances can be launched on a single node. While more than one instance per node may work better in certain situations, users should always apply this strategy with caution. Because every FLAC3D instance is itself multi-threaded, launching more than one instance on a single node can easily add communication overhead and slow down the solving process.
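The cost of splitting a run into many cycle commands can be seen with a simple linear model: each command pays one distribute-and-gather round, and the cycles themselves take the same total time however they are grouped. The sketch below is a simplification with hypothetical timings (20 s per distribute/gather round, 0.25 s per cycle), not measured FLAC3D figures.

```python
def run_time(n_cycle_commands, total_cycles, sync_overhead_s, cycle_time_s):
    """Total time when each cycle/solve command pays one distribute+gather round."""
    return n_cycle_commands * sync_overhead_s + total_cycles * cycle_time_s


# Hypothetical: 400 cycles in one command versus 40 commands of 10 cycles each.
print(run_time(1, 400, 20.0, 0.25))
print(run_time(40, 400, 20.0, 0.25))
```

Under these assumed numbers the same 400 cycles take 120 s in one command but 900 s in forty, because the fixed per-command overhead dominates once commands become short.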

Itasca Software © 2024, Itasca | Updated: Dec 05, 2024